4 Key Ways to Simplify Backing Up Large Datasets

The ultimate goal when restoring servers is to recover everything successfully. This is especially important for organizations with large datasets, which need to determine how they will recover their data before deciding how to back it up. A large dataset is commonly defined as a data footprint of 5 TB or more. However, depending on the kind of data it contains, two separate 5 TB environments may need different data protection strategies. Backing up large datasets can be a headache, but we’ve put together a list of tips to simplify the process for you.

Incremental Backups

A full backup of a large dataset can take hours or even days, which makes repeating it on a regular schedule impractical. Because of this, consider incremental backups rather than running a full backup every time. With this method, after an initial full backup, you back up only the data that has changed since the last backup was performed. This is especially useful when data changes frequently and must be backed up often, because each incremental backup captures only what changed rather than the whole dataset. Through this approach, you’ll cut down on the time needed to complete each backup while still being able to restore a complete copy of your data from the full backup plus its incrementals.
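To make the idea concrete, here is a minimal sketch of file-level incremental selection, assuming that a file’s modification time is a reliable signal of change. The function names `files_changed_since` and `incremental_backup` are hypothetical and do not correspond to any particular backup product; the sketch only shows the core idea of copying what changed since the last run.

```python
import shutil
from pathlib import Path


def files_changed_since(source: Path, last_backup_time: float) -> list[Path]:
    """Return files under `source` whose modification time is newer than
    `last_backup_time` (seconds since the epoch).

    Assumption: mtime is a trustworthy change signal for this dataset.
    """
    changed = []
    for path in source.rglob("*"):
        if path.is_file() and path.stat().st_mtime > last_backup_time:
            changed.append(path)
    return sorted(changed)


def incremental_backup(source: Path, dest: Path, last_backup_time: float) -> list[Path]:
    """Copy only the changed files into `dest`, preserving relative paths.

    Returns the relative paths that were copied.
    """
    copied = []
    for path in files_changed_since(source, last_backup_time):
        relative = path.relative_to(source)
        target = dest / relative
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)  # copy2 also preserves file timestamps
        copied.append(relative)
    return copied
```

In practice you would record the start time of each run and pass it as `last_backup_time` to the next one. Many backup products use more robust change detection than timestamps, such as block-level change tracking, which also avoids rescanning every file in a very large dataset.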

Scale-Out Systems

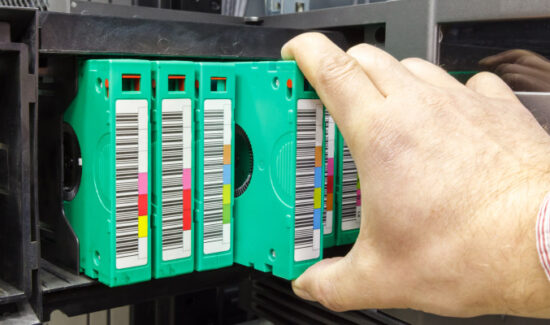

A scale-out backup system can help solve performance and capacity problems with large datasets. With a system similar to scale-out NAS, you can add nodes that contribute both capacity and performance as your data grows. A system like this works well for large datasets because it can offer remote backup, deduplication, and replication at higher speeds. When you can reduce the amount of data you’re managing, for example through deduplication, and scale your environment as needed, your critical data will be better protected from threats and system failures.
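Deduplication is what lets these systems store less than they back up. The following is a minimal sketch of block-level deduplication, assuming fixed-size 4 KiB chunks identified by their SHA-256 hash; the `DedupStore` class and `CHUNK_SIZE` value are hypothetical, and many real products use variable-size (content-defined) chunking instead. Each unique chunk is stored once, and every backup is recorded as a list of chunk hashes.

```python
import hashlib

# Hypothetical fixed chunk size; real systems often use variable-size chunking.
CHUNK_SIZE = 4096


class DedupStore:
    """Toy content-addressed store: each unique chunk is kept only once."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}  # SHA-256 hex digest -> chunk bytes

    def write(self, data: bytes) -> list[str]:
        """Split `data` into chunks, store unseen ones, and return the
        recipe (ordered list of chunk hashes) needed to rebuild it."""
        recipe = []
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # skip chunks already stored
            recipe.append(digest)
        return recipe

    def read(self, recipe: list[str]) -> bytes:
        """Reassemble the original data from its recipe."""
        return b"".join(self.chunks[h] for h in recipe)

    def stored_bytes(self) -> int:
        """Physical bytes actually kept after deduplication."""
        return sum(len(c) for c in self.chunks.values())


if __name__ == "__main__":
    store = DedupStore()
    monday = b"A" * CHUNK_SIZE + b"B" * CHUNK_SIZE
    tuesday = b"A" * CHUNK_SIZE + b"C" * CHUNK_SIZE  # only the second chunk changed
    r1 = store.write(monday)
    r2 = store.write(tuesday)
    print("logical bytes:", len(monday) + len(tuesday))
    print("stored bytes:", store.stored_bytes())
```

Here two 8 KiB backups share their first chunk, so only three chunks are stored instead of four. The savings grow with the amount of unchanged or repeated data, which is why deduplication pays off most on large datasets.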

Data Protection

For overall protection against application or user error, snapshots can be a great help when backing up large datasets. A solution that keeps local copies of data for local restores is ideal for large datasets, as these restores complete faster than restores from a remote copy. Having a local copy, along with image-based technology that can perform snapshots and replication, speeds up both the backup and the restore process.

Data Sleuthing

Before applying any of the above tactics, it can be beneficial to evaluate the data you’re looking to protect. Consider whether protecting such a large amount of data is truly necessary. For example, data that is critical, falls under the company’s document retention policy, or is required by a federal or industry regulation must be protected. Data that does not fall into those categories, however, may not need to be part of your standard backup schedule. Taking into account the kind of data you’re backing up allows you to conserve bandwidth and gain flexibility throughout the process.
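The triage described above can be expressed as a simple rule. Below is a minimal sketch, assuming each dataset in your inventory has already been tagged with the three criteria named in the text (critical, covered by the retention policy, or required by regulation); the `DataSet` fields and the `must_protect` and `plan_backup` helpers are hypothetical names for illustration, not part of any specific tool.

```python
from dataclasses import dataclass


@dataclass
class DataSet:
    """Hypothetical inventory entry for one dataset."""
    name: str
    size_tb: float
    critical: bool = False            # business-critical data
    retention_required: bool = False  # covered by the document retention policy
    regulated: bool = False           # required by a federal or industry regulation


def must_protect(ds: DataSet) -> bool:
    """A dataset belongs on the standard schedule if any criterion applies."""
    return ds.critical or ds.retention_required or ds.regulated


def plan_backup(datasets: list[DataSet]) -> tuple[list[DataSet], list[DataSet]]:
    """Split the inventory into (scheduled, candidates for exclusion)."""
    scheduled = [ds for ds in datasets if must_protect(ds)]
    excluded = [ds for ds in datasets if not must_protect(ds)]
    return scheduled, excluded


if __name__ == "__main__":
    inventory = [
        DataSet("finance-ledger", 2.0, critical=True),
        DataSet("hr-records", 1.5, retention_required=True),
        DataSet("old-build-artifacts", 3.0),
    ]
    scheduled, excluded = plan_backup(inventory)
    print("scheduled:", [d.name for d in scheduled])
    print("TB kept off the standard schedule:", sum(d.size_tb for d in excluded))
```

Keeping the rule explicit makes it easy to review with compliance stakeholders; data that lands in the excluded list is a candidate for a less frequent schedule or archiving rather than automatic deletion.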

Backing up large datasets can be a difficult operation. However, this process is not impossible. By putting the above tactics into practice, you’ll simplify what can be a tedious and time-consuming operation, thereby protecting your data with minimal complications.

Looking for more information on backup and disaster recovery solutions? Consider downloading our Backup and Disaster Recovery Buyer’s Guide! This free resource gives you the ability to compare the top 23 products available on the market with full-page vendor profiles. The guide also offers five questions to ask yourself and five questions to ask your software provider before purchasing. It’s the best resource for anyone looking to find the right backup and disaster recovery solution for their organization. Additionally, consider consulting our Disaster Recovery as a Service Buyer’s Guide, as well as our new Data Protection Vendor Map, to assist you in selecting the right solution for your business.