Rooting Out Bias in Automated Processes

As part of Solutions Review’s Expert Insights Series (a collection of contributed articles written by industry experts in enterprise software categories), Bernd Ruecker, co-founder and chief technologist at Camunda, explains how companies can begin to root out bias in their automated processes.

Process automation is gaining traction across industries because enterprises see the many benefits it can provide in an increasingly competitive business environment. It speeds up innovation, improves productivity, and frees employees to take on more complex strategic jobs. One in three IT professionals says their process automation investments at least paid for themselves within a year.

Done well, process automation is a valuable tool. But what if automated processes perpetuate existing biases that surfaced only occasionally in manual processes? How can you keep bias from sneaking into the algorithms behind your process automation?

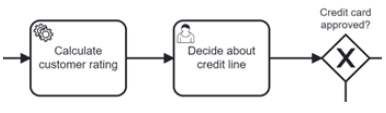

Let’s disentangle this important question using the example of issuing credit cards. This is a process that can be visualized using the standardized Business Process Model and Notation (BPMN):

Source: Camunda

There are two great things about BPMN. First, this model can be directly executed by process orchestration tools. Those tools will then coordinate all credit card requests and integrate the right systems at the right time.

Second, the visual model helps people understand the process. Because this is the model that actually runs, the visual is not wishful thinking but IT reality. And transparency and visibility for various stakeholders are essential to eliminating bias. In this example, we can assume the process control flow itself does not contain bias. At the same time, we can suspect that specific decisions within single tasks, like the customer rating or the decision about the credit line, may contain some level of bias.
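To make the split between control flow and decision logic concrete, here is a minimal Python sketch. It is not BPMN and not a Camunda API, just a plain-code stand-in that assumes the process has a customer-rating task followed by a credit-line decision. The names `Application`, `rate_customer`, `decide_credit_line`, and `issue_credit_card`, and all thresholds, are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Application:
    """Hypothetical credit card application data."""
    applicant_id: str
    annual_income: float
    existing_debt: float


def rate_customer(app: Application) -> str:
    """Hypothetical decision: rate the customer by debt-to-income ratio."""
    if app.annual_income <= 0:
        return "C"
    ratio = app.existing_debt / app.annual_income
    if ratio < 0.2:
        return "A"
    if ratio < 0.5:
        return "B"
    return "C"


def decide_credit_line(app: Application, rating: str) -> float:
    """Hypothetical decision: credit line as a share of annual income.

    Only called for ratings "A" and "B"; the process rejects "C" first.
    """
    share = {"A": 0.2, "B": 0.1}[rating]
    return round(app.annual_income * share, 2)


def issue_credit_card(
    app: Application,
    rate: Callable[[Application], str] = rate_customer,
    decide: Callable[[Application, str], float] = decide_credit_line,
) -> dict:
    """Control flow only: call the decisions and route on their results.

    Nothing here inspects who the applicant is; any bias would live in the
    pluggable `rate` and `decide` functions, which is where review belongs.
    """
    rating = rate(app)
    if rating == "C":
        return {"status": "rejected", "rating": rating}
    return {"status": "issued", "rating": rating, "credit_line": decide(app, rating)}


print(issue_credit_card(Application("a-1", 50_000, 5_000)))
# {'status': 'issued', 'rating': 'A', 'credit_line': 10000.0}
```

Because the decisions are passed in as functions, a reviewed or corrected decision can be swapped in without touching the routing logic, mirroring how a BPMN task can call a separately maintained decision.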

Bias is typically found in decision logic

This is a common pattern: Bias is typically found in the decision logic, not so much in the process control flow. While this sounds obvious, it offers clues about how bias can creep into such decisions. A typical journey toward automation looks like this: In early maturity stages, decisions are made manually. A human looks at all the customer data and decides on the credit limit based on specific rules, enriched with their gut feeling (often called experience). This gut feeling might introduce bias in the first place; by some counts, humans are prone to more than 180 distinct cognitive biases.

When automating a decision like this, many companies are looking into machine learning (ML) and artificial intelligence (AI). In doing so, they typically train the model on historical decision data. While this is a complicated task, it’s easy to understand how things will turn out: If you teach something with biased data, it will learn that bias.

Bias can also be built into business rules and then perpetuate itself in business practices. If a bank trains systems to accept one set of documents and reject similar documents, it can discriminate against people applying for loans. If a recruiter creates rules with tight qualification criteria, a firm could favor one group of applicants over another.
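The "biased data in, biased model out" mechanism can be shown with a deliberately trivial stand-in for a machine learning model. The Python sketch below is not a real ML pipeline: it "learns" by memorizing the average historical credit line for each combination of features. The data set, the `train` and `predict` helpers, and the field names are all invented for illustration.

```python
from collections import defaultdict

# Hypothetical historical decisions: (income_band, gender, credit_line_granted).
# In this made-up history, women received lower limits in the same income band.
HISTORY = [
    ("high", "m", 20_000), ("high", "m", 22_000),
    ("high", "f", 8_000), ("high", "f", 10_000),
    ("low", "m", 6_000), ("low", "f", 3_000),
]


def train(history):
    """'Train' a trivial model: the mean past limit per feature combination."""
    buckets = defaultdict(list)
    for band, gender, limit in history:
        buckets[(band, gender)].append(limit)
    return {key: sum(limits) / len(limits) for key, limits in buckets.items()}


def predict(model, band, gender):
    """Predict a credit line by looking up the learned mean."""
    return model[(band, gender)]


model = train(HISTORY)
# Two applicants identical except for gender get very different limits.
print(predict(model, "high", "m"), predict(model, "high", "f"))  # 21000.0 9000.0
```

A real model is far more complex, but the principle is the same: whatever pattern the historical decisions contain, the trained model reproduces.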

A famous example of bias in credit line decisions is the outcry around the Apple Card in 2019. Several customers reported that women were granted much smaller credit lines than their husbands, in some cases up to 20 times smaller. A lot was written about the Apple Card case, and it is still not entirely clear how the bias ended up in the decision system. But it’s easy to see the damage this does to a company’s reputation, beyond the impact of potential lawsuits.

One important takeaway: A black-box decision has the disadvantage that nobody can easily assess it and check for biases.

Making decision logic accessible to a diverse set of people

Is there a different path to follow? One possibility is to hand-craft the decision model for credit lines. I find the standardized Decision Model and Notation (DMN) very helpful: it can express decision tables visually and, like BPMN, can also be executed directly by suitable software. For the example above, a decision table might look like the following:

Source: Camunda
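Because the table is shown only as an image, the Python sketch below uses made-up inputs (a customer rating and an annual income) and made-up credit lines. It mimics how a decision table is evaluated under DMN's FIRST hit policy (the first matching row wins), with `None` playing the role of DMN's "-" (any value). It is not the Camunda DMN engine or its API; `Rule`, `evaluate_first_hit`, and `render` are hypothetical helpers. The point is that rules kept as plain data can be printed, reviewed, and tested.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

INF = float("inf")


@dataclass(frozen=True)
class Rule:
    """One row of a hypothetical credit line decision table."""
    rating: Optional[str]  # None matches any rating, like "-" in DMN
    min_income: float      # inclusive lower bound
    max_income: float      # exclusive upper bound
    credit_line: int       # output of the rule
    annotation: str = ""   # free-text note for reviewers


# Hypothetical rules; the table from the article is not reproduced here.
CREDIT_LINE_TABLE = [
    Rule("A", 100_000, INF, 20_000, "Top rating, high income"),
    Rule("A", 0, 100_000, 10_000, "Top rating"),
    Rule("B", 0, INF, 5_000, "Medium rating"),
    Rule(None, 0, INF, 1_000, "Fallback for everyone else"),
]


def matches(rule: Rule, rating: str, income: float) -> bool:
    """True if every input condition of the rule holds."""
    rating_ok = rule.rating is None or rule.rating == rating
    return rating_ok and rule.min_income <= income < rule.max_income


def evaluate_first_hit(table: Sequence[Rule], rating: str, income: float) -> Optional[int]:
    """Return the output of the first matching rule, or None if none matches."""
    for rule in table:
        if matches(rule, rating, income):
            return rule.credit_line
    return None


def render(table: Sequence[Rule]) -> str:
    """Format the table as text so non-developers can review it."""
    lines = ["rating | income range | credit line | note"]
    for r in table:
        lines.append(
            f"{r.rating or '-'} | [{r.min_income:g}, {r.max_income:g}) | {r.credit_line} | {r.annotation}"
        )
    return "\n".join(lines)


print(evaluate_first_hit(CREDIT_LINE_TABLE, "A", 150_000))  # 20000
print(render(CREDIT_LINE_TABLE))
```

With a FIRST hit policy, rule order matters, so reviewers need to read the rows top to bottom; other DMN hit policies (such as UNIQUE) would instead require that at most one row matches.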

Of course, just creating such a decision table does not eliminate bias. Analysts or developers creating this model are also human and might build their own biases into it. But this visual way of describing decision logic enables a proper review by different people. It is up to your organization to establish a practice in which relevant decision models are systematically checked for bias by a diverse set of people. The process models sketched above can help identify sensitive decisions.
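Human review can be complemented by simple automated checks. One such check, sketched below in Python, is a counterfactual test: take test applicants, change only a protected attribute such as gender, and flag every case where the decision changes. It only catches direct use of the attribute, not bias that enters through correlated inputs, so it supports rather than replaces review by a diverse group of people. `Applicant`, `counterfactual_violations`, and both decision functions are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Callable, Hashable, Iterable, List


@dataclass(frozen=True)
class Applicant:
    """Hypothetical applicant record used as test input."""
    income: int
    rating: str
    gender: str  # protected attribute: the decision should not depend on it


def counterfactual_violations(
    decide: Callable[[Applicant], Hashable],
    applicants: Iterable[Applicant],
    attribute: str,
    values: Iterable[str],
) -> List[Applicant]:
    """Return applicants whose decision changes when only `attribute` is swapped."""
    candidate_values = list(values)  # reused for every applicant
    violations = []
    for applicant in applicants:
        outcomes = {decide(replace(applicant, **{attribute: v})) for v in candidate_values}
        if len(outcomes) > 1:
            violations.append(applicant)
    return violations


def neutral_decision(a: Applicant) -> int:
    """Hypothetical decision that ignores gender."""
    return 10_000 if a.rating == "A" else 2_000


def biased_decision(a: Applicant) -> int:
    """Hypothetical decision that (wrongly) halves the limit for one group."""
    base = neutral_decision(a)
    return base // 2 if a.gender == "f" else base


TEST_APPLICANTS = [Applicant(80_000, "A", "m"), Applicant(40_000, "B", "f")]
print(len(counterfactual_violations(neutral_decision, TEST_APPLICANTS, "gender", ["m", "f"])))  # 0
print(len(counterfactual_violations(biased_decision, TEST_APPLICANTS, "gender", ["m", "f"])))  # 2
```

Run automatically on every change to a decision model, a check like this is one way to make bias reviews a routine part of development rather than an afterthought.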

You may think your decisions are too complex to be captured in simple decision tables. In my experience, the answer is both yes and no. I agree that it will be hard to put every nut and bolt of a credit line decision into such a table. At the same time, I have seen insurance companies encode hundreds of parameters in thousands of rules using DMN and be very happy with the result. Looking at the Apple Card disaster, it might also be a matter of priority. More effort in defining such a rule base would likely have paid off, especially because it would have allowed the company to easily pinpoint and fix biases that had been missed in the first place.

Of course, this approach will not magically eliminate all bias. But making decision logic visible and raising awareness across the organization of why eliminating bias matters will get you a good part of the way.

Five tactics to remove bias

To summarize, here are five tactics that can help your organization to remove bias in automated processes:

- Visualize process models (e.g., using directly executable BPMN models) to help identify sensitive decisions.

- Move important decision logic to executable models that are business readable (like DMN).

- Enable proper reviews by making graphical models available to different stakeholders, ideally flanked by collaborative tooling that makes it easy to pull those people in. These models should be directly executable so that people look at the “as-is” implementation and not at some documentation detached from it.

- Anchor those reviews in your software development approach and make sure they actually happen. Involve a diverse set of people to minimize bias.

- Analyze data of past decisions and process runs to identify biases, which can then be addressed by improving decision logic.
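For the last tactic, a first pass over past decisions can be as simple as comparing outcomes across groups. The Python sketch below uses an invented decision log and computes approval rates, average granted credit lines, and the ratio of the lowest to the highest approval rate. The 0.8 threshold borrows the "four-fifths" rule of thumb known from U.S. employment selection guidelines and is used here only as an illustrative assumption. A flagged gap is a prompt to investigate the decision logic, not proof of bias on its own.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical log of past decisions: (group, approved, credit_line_granted).
Decision = Tuple[str, bool, int]
DECISIONS = [
    ("m", True, 12_000), ("m", True, 9_000), ("m", False, 0), ("m", True, 15_000),
    ("f", True, 4_000), ("f", False, 0), ("f", False, 0), ("f", True, 5_000),
]


def approval_rates(decisions: Iterable[Decision]) -> Dict[str, float]:
    """Share of applications approved, per group."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok, _ in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def mean_credit_line(decisions: Iterable[Decision]) -> Dict[str, float]:
    """Average credit line among approved applications, per group."""
    sums: Dict[str, int] = defaultdict(int)
    counts: Dict[str, int] = defaultdict(int)
    for group, ok, line in decisions:
        if ok:
            sums[group] += line
            counts[group] += 1
    return {g: sums[g] / counts[g] for g in counts}


def impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


THRESHOLD = 0.8  # illustrative assumption, not a legal standard for credit

rates = approval_rates(DECISIONS)
ratio = impact_ratio(rates)
print(rates)                        # {'m': 0.75, 'f': 0.5}
print(mean_credit_line(DECISIONS))  # {'m': 12000.0, 'f': 4500.0}
print(f"impact ratio {ratio:.2f}, flagged: {ratio < THRESHOLD}")  # impact ratio 0.67, flagged: True
```

Looking at both approval rates and granted amounts matters: in a case like the Apple Card, the reported gap was in the size of the credit line, which an approval-rate check alone would miss.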

Automated processes can help generate business success. But if the automated processes themselves are flawed, automation initiatives can hold organizations back. Rooting out biases early, before they can be integrated into business practices, puts organizations in a position to take advantage of process automation’s benefits.