Four Factors to Consider When Selecting a Storage Provider

Solutions Review’s Contributed Content Series is a collection of contributed articles written by thought leaders in enterprise tech. In this feature, Quantum’s Skip Levens offers key factors to consider when selecting a storage provider.

Organizations need the right storage infrastructure in place now if they hope to keep pace with the rise of unstructured data, AI, and advanced analytics, which will be key to enterprise success in the “Age of AI.” Organizations are realizing that they need to retain all of their data, not just some of it, to fuel and capitalize on these new applications and to derive insights from that data as their tools and AI models get smarter.

By 2025, the amount of data created is forecast to reach 181 zettabytes. That is a staggering figure and more than double the 79 zettabytes forecast just last year. This data must be retained securely and over the long term if it is to drive innovation and insights, which demands a cost-effective, scalable, flexible, and secure end-to-end storage infrastructure.

Options range from traditional on-premises hardware to fully remote cloud-based services, or a combination of the two. How can an organization select a solution that will help it grow, especially in a rapidly changing competitive landscape? Here are four factors to consider when you’re seeking the right storage infrastructure for your organization.

Scalability

Data volumes keep growing and future requirements will only expand, so organizations need infrastructure that scales easily as their needs grow. With the rise of AI, for example, more data is needed to power this technology. The faster your data sets can be recalled by your data-crunching applications and AI models, the faster you get insights and a payoff. Organizations that collect and use their own unique data, such as support calls, video clips, customer stories, and research data, will jump ahead of other companies and build more efficient and productive AI tools that are highly customized to their exact environment.

Choosing a software solution that can be deployed on any standard hardware makes scaling easier, and it is the smart approach. You are banking on market forces and competition among providers of “supply-chain-friendly” hardware to keep costs competitive. The alternative is relying on customized hardware built from bespoke components and sourced from a single vendor or only a handful of suppliers.
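One way to see why software-defined storage scales well on interchangeable commodity servers is consistent hashing, a widely used technique for spreading objects across nodes. The Python sketch below is a hypothetical illustration only. It is not a description of how Quantum or any specific product distributes data. The `HashRing` class, the node names, and the choice of 100 virtual points per node are all assumptions. The property it demonstrates is that adding a server relocates only the objects that now belong to the new node, rather than reshuffling everything.

```python
import bisect
import hashlib


def _ring_position(value: str) -> int:
    """Map a string to a stable integer position on the hash ring (MD5-based)."""
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Consistent-hash ring assigning object keys to (hypothetical) storage nodes."""

    def __init__(self, nodes=(), vnodes: int = 100):
        self.vnodes = vnodes  # virtual points per node; more points = more even spread
        self._points = []  # sorted ring positions
        self._owner = {}  # ring position -> node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        """Place `vnodes` points for the node on the ring."""
        for i in range(self.vnodes):
            point = _ring_position(f"{node}#{i}")
            bisect.insort(self._points, point)
            self._owner[point] = node

    def node_for(self, key: str) -> str:
        """Return the node owning the first ring point clockwise from the key."""
        if not self._points:
            raise LookupError("the ring has no nodes")
        idx = bisect.bisect(self._points, _ring_position(key)) % len(self._points)
        return self._owner[self._points[idx]]


if __name__ == "__main__":
    keys = [f"object-{i}" for i in range(10_000)]
    ring = HashRing(["node-a", "node-b", "node-c"])
    before = {k: ring.node_for(k) for k in keys}
    ring.add_node("node-d")  # scale out by one commodity server
    moved = [k for k in keys if ring.node_for(k) != before[k]]
    print(f"{len(moved) / len(keys):.1%} of objects moved")  # expected near 25%
```

When three nodes grow to four, roughly a quarter of the objects move, and every one of them moves to the new node. A naive `hash(key) % node_count` scheme would instead remap most objects when the node count changes.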

Flexibility

Every organization needs a storage solution that can adapt to its varied and ever-changing demands. A hybrid cloud environment lets organizations meet dynamic workflow goals driven by accessibility, protection, and budget. It also lets them shift workloads to wherever the workflow (and the budget) makes sense: their own data center, the cloud, or a mix of both. It’s important to consider the “where” and the “when” of data. You should be able to place your data where it needs to go and still access it when it’s needed. That calls for a flexible solution that makes it easy to move and store data in your own facility, in the cloud, or at the edge. A hybrid cloud deployment gives organizations that flexibility, placing data where it’s needed in the most cost-effective way possible.
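The “where” and “when” idea can be sketched as a location-independent namespace. Applications always read data by the same key, while a catalog records whether that data currently lives on premises, in the cloud, or at the edge. Data can therefore be moved without changing how it is accessed. This minimal Python sketch uses in-memory dictionaries in place of real storage backends. The `HybridNamespace` class and the location names are hypothetical and do not reflect any particular product’s API.

```python
from dataclasses import dataclass, field


@dataclass
class HybridNamespace:
    """Toy global namespace: callers use one key wherever the bytes live.

    Each location is an in-memory dict standing in for a real backend
    (on-prem array, cloud bucket, edge cache); the location names are hypothetical.
    """

    locations: dict = field(
        default_factory=lambda: {"on_prem": {}, "cloud": {}, "edge": {}}
    )
    catalog: dict = field(default_factory=dict)  # key -> name of current location

    def put(self, key: str, data: bytes, location: str) -> None:
        """Store an object in the chosen location and record where it went."""
        self.locations[location][key] = data
        self.catalog[key] = location

    def move(self, key: str, new_location: str) -> None:
        """Relocate an object; its key, and therefore every caller, is unaffected."""
        old_location = self.catalog[key]
        if old_location == new_location:
            return
        self.locations[new_location][key] = self.locations[old_location].pop(key)
        self.catalog[key] = new_location

    def get(self, key: str) -> bytes:
        """Look up the object's current location, then read it from there."""
        return self.locations[self.catalog[key]][key]


if __name__ == "__main__":
    ns = HybridNamespace()
    ns.put("projects/2023/footage.mov", b"raw video", "on_prem")
    ns.move("projects/2023/footage.mov", "cloud")  # e.g. to free local capacity
    print(ns.catalog["projects/2023/footage.mov"])  # cloud
    print(ns.get("projects/2023/footage.mov"))  # b'raw video'
```

The design choice worth noting is that only `move` knows about physical placement. Readers go through `get`, so a budget-driven shift from the data center to the cloud does not break any workflow that depends on the data.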

Accessibility

Historically, some data has required high, ongoing performance and easy access and has been stored accordingly, while other data has been archived and left untouched for years or decades. Now, though, that archived data needs to be discoverable, because information once deemed unimportant could serve any number of key purposes as analytics technology and AI models continue to develop. In this sense, all data is now cyclical: it can be used, archived, reused, and analyzed again for new insights. To make that possible, data must remain easily accessible and searchable. Deploying a “smart” infrastructure that uses AI to tag, catalog, index, and organize your data makes it that much easier to search, find, enrich, and repurpose that data to drive business insights and innovation.
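At its core, keeping archived data searchable usually comes down to an index from descriptive tags to the objects that carry them. The Python sketch below is a minimal, hypothetical inverted index. In a real system the tags might be produced by AI models (for example, speech-to-text on support calls or object detection on video). Here they are supplied by hand, and the `TagCatalog` class and object keys are assumptions for illustration.

```python
from collections import defaultdict


class TagCatalog:
    """Inverted index from tags to object keys, so archived data stays findable.

    Tags are passed in by hand here; an AI tagging step is assumed, not shown.
    """

    def __init__(self):
        self._index = defaultdict(set)  # tag -> set of object keys
        self._tags = defaultdict(set)  # object key -> set of tags

    def tag(self, key: str, *tags: str) -> None:
        """Attach case-insensitive tags to an object."""
        for t in tags:
            t = t.lower()
            self._index[t].add(key)
            self._tags[key].add(t)

    def search(self, *tags: str) -> set:
        """Return the keys that carry *all* of the given tags."""
        if not tags:
            return set()
        matches = [self._index.get(t.lower(), set()) for t in tags]
        return set.intersection(*matches)


if __name__ == "__main__":
    catalog = TagCatalog()
    catalog.tag("calls/2019-04-02.wav", "support-call", "billing")
    catalog.tag("calls/2021-11-17.wav", "support-call", "outage")
    catalog.tag("video/launch.mp4", "customer-story")
    print(catalog.search("support-call", "billing"))  # {'calls/2019-04-02.wav'}
```

Because lookups go through the index rather than scanning the archive, a years-old recording becomes as easy to find as yesterday’s once it has been tagged.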

Sustainability

With the effects of climate change becoming increasingly apparent, organizations of all sizes feel pressure to help mitigate their impact and demonstrate corporate responsibility, with the added benefit of keeping costs low. Data centers and server farms have become a growing concern as they expand, and these facilities currently account for about 1 percent of global energy-related greenhouse gas emissions. That share is expected to rise as data generation continues to grow.

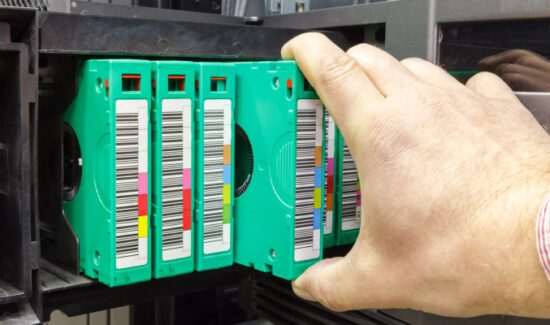

A high-density storage solution consumes less power, cooling, and rack space than a traditional server farm. Solid-state drives (SSDs), which are built on flash memory, generally offer the highest data density, followed by tape storage and hard disk drives (HDDs). Flash storage is both speedy and cost-effective: it is faster than other storage media while also consuming significantly less power for operation and cooling. Finally, for data that doesn’t need to be accessed regularly, tape libraries offer low-power, long-term storage. By investing in the combination of high-density and flash storage systems that makes the most sense for them, organizations can begin to reduce the carbon and greenhouse gas emissions stemming from data centers.
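Deciding which data belongs on which medium is often expressed as a tiering policy based on access patterns. The Python sketch below is a simplified, hypothetical rule. It sends recently read data to flash, occasionally read data to HDD, and long-untouched data to tape. The 30-day and 365-day thresholds and the `choose_tier` function are illustrative assumptions, not recommendations. A real policy would also weigh recall latency, retention requirements, and cost.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical thresholds: real policies depend on workload, SLAs, and budget.
HOT_WINDOW = timedelta(days=30)
WARM_WINDOW = timedelta(days=365)


def choose_tier(last_access: datetime, now: Optional[datetime] = None) -> str:
    """Suggest a storage medium based on how recently the data was last read.

    Both datetimes are expected to be timezone-aware.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    age = now - last_access
    if age <= HOT_WINDOW:
        return "flash"  # frequently read: fastest media
    if age <= WARM_WINDOW:
        return "hdd"  # occasionally read: lower cost per terabyte than flash
    return "tape"  # rarely read: low-power, long-term archive


if __name__ == "__main__":
    now = datetime(2024, 1, 18, tzinfo=timezone.utc)
    print(choose_tier(now - timedelta(days=3), now))  # flash
    print(choose_tier(now - timedelta(days=200), now))  # hdd
    print(choose_tier(now - timedelta(days=2000), now))  # tape
```

Passing `now` explicitly keeps the rule deterministic and easy to test. Omitting it evaluates the policy against the current UTC time.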

Choosing the Best Solution for Today and Tomorrow

While technology is constantly changing and needs are evolving, one thing is certain: data will keep growing. Organizations that choose a storage infrastructure able to evolve with their needs over the long term will be best positioned to manage, and capitalize on, that growth. With the right solution in place, organizations will be prepared to grow alongside their data, create value from their largest data sets, and fuel innovation.

- Four Factors to Consider When Selecting a Storage Provider - January 18, 2024