Rethinking Security in the Age of Agentic AI

James White, the President and Chief Technology Officer at CalypsoAI, recently commented on why companies need to rethink their security initiatives in the age of agentic AI. This article originally appeared in Insight Jam, an enterprise IT community that enables human conversation on AI.

Enterprise investment in AI is rocketing, and the arrival of AI agents means that trend is likely to accelerate. By 2028, at least 15 percent of day-to-day work decisions will be made autonomously through AI agents, and 33 percent of enterprise software applications will include agentic AI, up from less than 1 percent in 2024.

Enterprise investment in AI is rocketing, and the arrival of AI agents means that trend is likely to accelerate. By 2028, at least 15 percent of day-to-day work decisions will be made autonomously through AI agents, and 33 percent of enterprise software applications will include agentic AI, up from less than 1 percent in 2024.

While agentic AI promises a major leap in efficiency—reducing the need for human involvement in certain tasks—it also presents significant new security risks. Crucially, those risks are either not very well understood or are being overlooked in the race for cost savings and productivity gains.

An AI agent effectively has three layers: its specific instructions, which give the agent its purpose; the underlying AI model, which acts as its brain; and the tools that the agent’s owner has made accessible to it. The agent works on the invisible interactions between the layers, but those interactions hide vulnerabilities that businesses have never encountered before.

Compounding the issue, any change to any of the agent’s layers—such as a model update or the addition of access to extra tools—opens up new attack surfaces for bad actors to target. Depending on the use case, the consequences of a failure or attack on an AI system range from not ideal to disastrous; consider the hacking of an agent authorized to carry out bank transfers as an example.

Putting AI on the Offensive

Incredibly, even the top companies in AI model development still rely heavily on human-centric testing of their technologies. Manual red-teaming exercises can span weeks or months, resulting in a stale and incomplete understanding of current risks. In a world where each AI agent is equipped and tasked differently—and has the ability to learn—that approach is hopelessly outdated.

So, how can businesses move forward with AI without opening themselves up to substantial risk? The answer lies in using AI itself to understand and address the risks involved in using agents.

CalypsoAI has developed Agentic Warfare, our description for using automated red-teaming to simulate real-world adversarial interactions and test the endless permutations arising from the introduction of agents. This multi-turn, customizable attack capability allows enterprises to find and plug hidden security gaps in AI systems, both before they are deployed and on an ongoing basis.

In recent testing, the agentic warfare capability in CalypsoAI’s Inference Red Team product found vulnerabilities in all the world’s leading foundation models. As enterprises rush to deploy new technologies built upon these models, understanding the relationship between performance, cost, and security risks has never been more critical.

Despite this, many companies and security experts still use Attack Success Rate (ASR) as the metric to assess model safety. However, this metric is oversimplified and fails to account for attack complexity and if the threats really matter, meaning enterprises do not get the insights they need to establish an effective perimeter around their AI systems.

The CalypsoAI Security Index

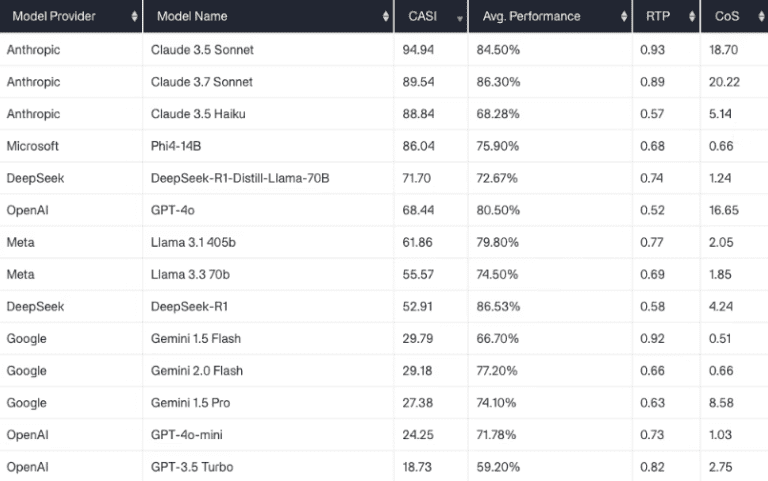

To address this gap, CalypsoAI has introduced the CalypsoAI Security Index, an entirely new metric to evaluate the security of AI models based on their ability to withstand advanced agentic warfare attacks. Our Leaderboard includes a Risk-to-Performance (RTP) ratio and a Cost of Security (CoS) metric so companies can understand the security risks of each model and their impact on cost and performance.

At this point, Anthropic’s Claude 3.5 Sonnet tops the leaderboard, while certain models from Google and OpenAI score poorly when security is measured against performance and cost. The CalypsoAI Leaderboard will be revised regularly as new models are released.

With AI evolving at an unprecedented rate and enterprise adoption firmly established, understanding the risks is paramount. An enterprise that uses AI built upon a high-performing but relatively insecure model runs an increased risk of being attacked, jeopardizing its entire AI investment and any expected returns, as well as causing reputational damage to the organization.

Following these benchmarks, enterprises can now truly understand the security risks of each AI model, allowing them to build out projects safely and confidently. For agentic AI to reach its potential, security must be recognized as an enabler of innovation, not an impediment.