Agentic Data Management and Data Observability: Autonomous Agents Arrive Just in Time

Sponsored by Acceldata

Data engineers struggle mightily to prepare and deliver data to voracious consumers that range from executives to line managers, analysts, data scientists, and of course AI agents. Given the scale and complexity of modern analytics initiatives, it’s no surprise that 69 percent of 131 respondents to a recent LinkedIn poll expect demand for data engineers to rise next year even as AI reduces the need for other software roles.

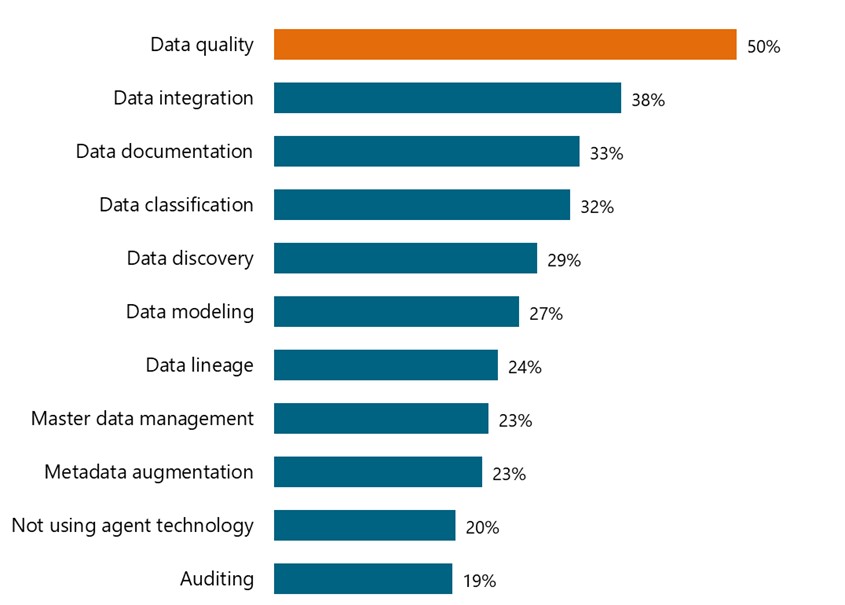

It’s also no surprise that data engineers and their colleagues have a high interest in getting assistance from… you guessed it: AI agents. A recent BARC survey shows that 80 percent of organizations already use agentic tools to manage data in some fashion. When asked to rank use cases for agentic data management, half selected data quality, followed by data integration (38 percent), documentation (33 percent), and classification (32 percent).

Is your organization using or considering using AI agents for the following aspects of data management? (n=305)

Data quality tops the list because it poses the most serious obstacle to the success of analytics. Tables and other data objects can misrepresent reality with outdated, incomplete, inconsistent, or otherwise inaccurate facts. This leads business stakeholders to make suboptimal decisions, machine learning models to make bad predictions, and GenAI models to hallucinate. Data engineers therefore spend countless hours finding and fixing data quality issues as use cases proliferate, sources multiply, and volumes rise.

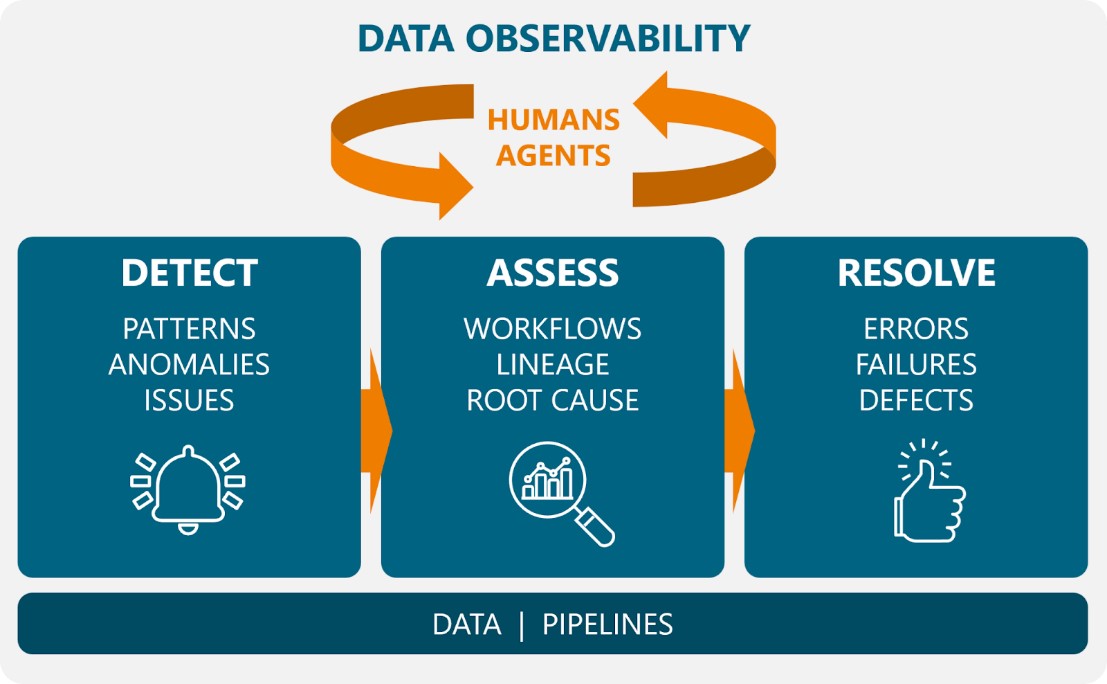

Data teams, including data engineers and sometimes data stewards, optimize data quality across a data observability lifecycle of three stages: detect, assess, and resolve. Let’s define each stage, then explore how agentic tools from vendors such as Acceldata streamline the process while engaging data teams through a natural language interface.

Detect. During this stage, data teams identify patterns in a variety of signals, including data value ranges, schema changes, volume, latency, and throughput. They also detect anomalies (i.e., breaks in those patterns) and outright issues such as null values or system errors. Both call for attention before they spread downstream.

An agentic data observability platform helps by continuously monitoring all these signals and flagging unusual behavior based on learned patterns and human-defined thresholds. It describes issues and recommends next steps for the data engineer to approve, revise, or reject.
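To make the combination of learned patterns and human-defined thresholds concrete, here is a minimal Python sketch. It is illustrative only and not drawn from any vendor's product: the function name `flag_anomalies`, the z-score test, and the sample latency readings are all assumptions chosen for simplicity.

```python
from statistics import mean, stdev

def flag_anomalies(history, latest, max_allowed=None, z_limit=3.0):
    """Return a list of reasons the latest value looks unusual (empty if none)."""
    reasons = []
    # Human-defined threshold: a hard limit set by the data team.
    if max_allowed is not None and latest > max_allowed:
        reasons.append(f"value {latest} exceeds threshold {max_allowed}")
    # Learned pattern (assumed here to be a simple z-score against past values).
    if len(history) >= 2:
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(latest - mu) / sigma > z_limit:
            reasons.append(f"value {latest} deviates from learned mean {mu:.1f}")
    return reasons

# Hypothetical pipeline latency readings, in seconds.
latency_history = [41, 39, 40, 42, 38, 40]
print(flag_anomalies(latency_history, 40, max_allowed=60))  # nothing flagged
print(flag_anomalies(latency_history, 75, max_allowed=60))  # both checks fire
```

A real platform would likely use richer models than a z-score, but the shape is the same: each flag carries a plain-language reason that a data engineer can approve, revise, or reject.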

Assess. During this stage, data teams prioritize issues by measuring business impact in terms of affected users, revenue at risk, and similar measures. They assess workflows, for example by tracking the sequence of events related to a null value or system error. They also inspect lineage by identifying all the users and pipeline actions that touch a given dataset. These assessments enable data teams to identify the root cause of the issue.

An agentic platform helps by calculating impact scores, tracing workflows and lineage, and correlating events to propose likely root causes for the data engineer to consider. It offers hypotheses, ranked by confidence level, along with supporting evidence. This gives the data engineer a decision tree and supporting action plan for each branch.
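A rough Python sketch of impact scoring and confidence-ranked hypotheses might look like the following. The `Hypothesis` class, the scoring weights, and the example causes are hypothetical; an actual platform would derive confidence values from event correlation and lineage rather than hard-coding them.

```python
from dataclasses import dataclass, field

@dataclass
class Hypothesis:
    cause: str
    confidence: float  # 0.0 to 1.0; assumed to come from event correlation
    evidence: list = field(default_factory=list)

def impact_score(affected_users, revenue_at_risk,
                 user_weight=1.0, revenue_weight=0.001):
    """Combine affected users and revenue into one score (weights are assumptions)."""
    return affected_users * user_weight + revenue_at_risk * revenue_weight

def rank_hypotheses(hypotheses):
    """Order candidate root causes from most to least confident."""
    return sorted(hypotheses, key=lambda h: h.confidence, reverse=True)

# Hypothetical candidates for a spike in null values.
candidates = [
    Hypothesis("on-prem server failure", 0.15, ["no host alerts in window"]),
    Hypothesis("pipeline configuration error", 0.70, ["config changed 10 min before nulls"]),
    Hypothesis("defective transformation script", 0.40, ["script last edited 3 days ago"]),
]

print(f"impact score: {impact_score(120, 50_000):.1f}")
for h in rank_hypotheses(candidates):
    print(f"{h.confidence:.2f}  {h.cause}  evidence={h.evidence}")
```

Keeping the evidence attached to each hypothesis is the point of the design: the data engineer sees why a cause ranks where it does, not just the ranking.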

Resolve. Once data teams find the culprit, perhaps a pipeline configuration error, on-prem server failure, or defective transformation script, they work to resolve the issue. They reconfigure the pipeline, reboot the server, or debug the transformation script, all to minimize downstream damage to the business.

The agentic platform proposes remediation steps, such as configuration updates or software patches, based on predefined policies and human-approved rules. With the blessing of the data engineer, it executes the remediation plan and reports back on the outcome.
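The approval gate described here can be sketched in a few lines of Python. Everything below is an assumption made for illustration: the `run_remediation` function, the step names, and the callbacks stand in for whatever approval workflow and execution mechanism a given platform provides.

```python
def run_remediation(plan, approve, execute):
    """Run each proposed step only if the approver accepts it, then report outcomes."""
    report = []
    for step in plan:
        # Human in the loop: nothing executes without explicit approval.
        if not approve(step):
            report.append((step, "rejected"))
            continue
        try:
            execute(step)
            report.append((step, "succeeded"))
        except Exception as exc:
            report.append((step, f"failed: {exc}"))
    return report

# Hypothetical plan and callbacks.
plan = ["update pipeline config", "restart ingestion job", "drop staging table"]

def approve(step):
    # Stand-in for the data engineer's decision; rejects destructive steps.
    return not step.startswith("drop")

def execute(step):
    # Stand-in for the real action; here it only logs.
    print(f"executing: {step}")

for step, outcome in run_remediation(plan, approve, execute):
    print(f"{step}: {outcome}")
```

The report covers rejected and failed steps as well as successful ones, so the data engineer gets a full account of what did and did not happen.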

Agentic tools can do more than just enforce technical data quality rules. Designed and implemented well, they also learn to create rules or even higher-level governance policies. If certain pipeline configurations frequently fall below performance thresholds, the agentic tool can recommend new configuration rules. If a certain source-model combination correlates to frequent null values in the outputs, the agentic tool can devise a rule requiring other combinations. As they accumulate knowledge over time, agentic tools might create higher-level policies for data stewardship or propose new pipeline performance SLAs. This is where agentic tools get very interesting in the governance realm.
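As a simplified illustration of the source-model example, the Python sketch below tallies null rates per combination from a run log and proposes rules for the combinations that exceed a limit. The function name, the log format, the 5 percent limit, and the sample data are all hypothetical, and proposals are marked as pending so that a human still decides whether they become rules.

```python
from collections import defaultdict

def propose_avoid_rules(runs, max_null_rate=0.05, min_runs=3):
    """Suggest source-model combinations to avoid, based on observed null rates."""
    totals = defaultdict(lambda: {"runs": 0, "nulls": 0, "rows": 0})
    for source, model, null_rows, total_rows in runs:
        entry = totals[(source, model)]
        entry["runs"] += 1
        entry["nulls"] += null_rows
        entry["rows"] += total_rows

    proposals = []
    for (source, model), entry in sorted(totals.items()):
        # Skip combinations with too little history to judge.
        if entry["runs"] < min_runs or entry["rows"] == 0:
            continue
        null_rate = entry["nulls"] / entry["rows"]
        if null_rate > max_null_rate:
            proposals.append({
                "source": source,
                "model": model,
                "null_rate": round(null_rate, 3),
                "status": "pending human review",
            })
    return proposals

# Hypothetical run log: (source, model, null output rows, total output rows).
run_log = [
    ("crm", "churn_v2", 120, 1000),
    ("crm", "churn_v2", 90, 1000),
    ("crm", "churn_v2", 150, 1000),
    ("erp", "churn_v2", 5, 1000),
    ("erp", "churn_v2", 8, 1000),
    ("erp", "churn_v2", 2, 1000),
]

for rule in propose_avoid_rules(run_log):
    print(rule)
```

The `min_runs` guard reflects the idea that rules should come from accumulated knowledge rather than a single bad run.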

Getting Started

Agentic data management platforms offer a timely method for data engineers and their colleagues to keep pace with rising demand for all types of analytics. They help optimize data quality by detecting, assessing, and resolving issues faster, and free data engineers to focus on higher-value design and governance work. To get started on this journey, data leaders can prioritize use cases for agentic data management and define evaluation criteria for agentic tools. And to learn more about an example platform, they can check out Acceldata.
